OpenAI’s introduction of the O1 model marks a pivotal moment in the evolution of artificial intelligence, particularly in advanced reasoning. Designed to approach PhD-level performance on benchmark tasks, the model uses a chain of thought methodology to address intricate queries in domains such as mathematics, science, and coding. As the O1 model begins to reshape the landscape of AI applications, its implications for both personal and professional use merit closer examination. What remains to be seen is how these advancements will influence user engagement and expectations.

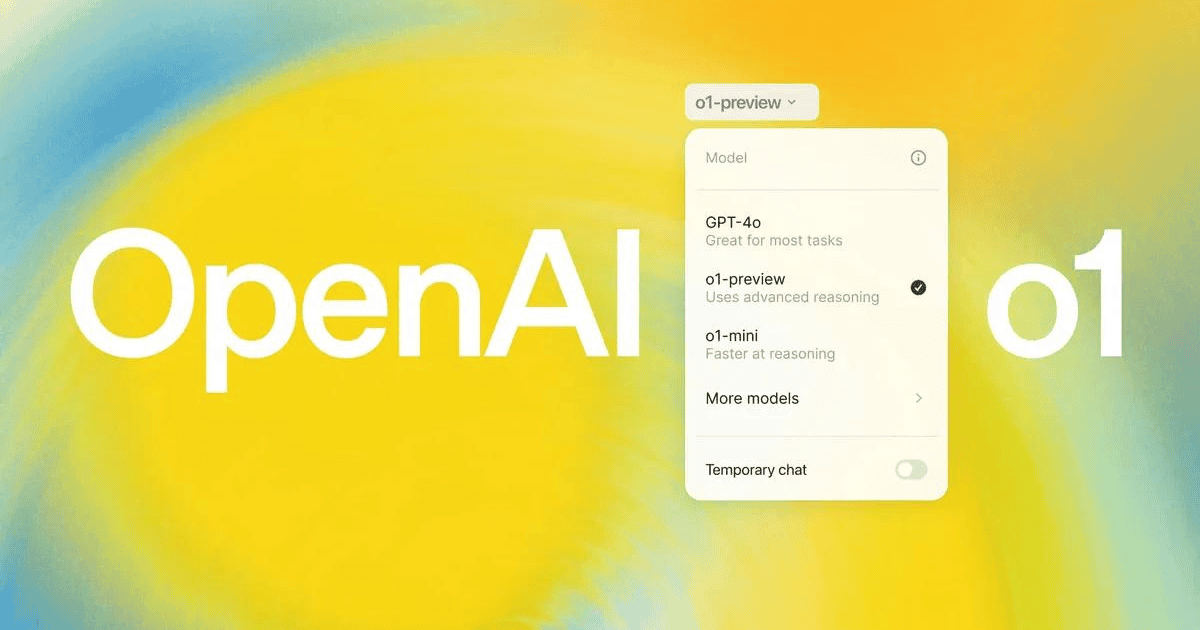

Overview of the O1 Model

The O1 model, previously codenamed Strawberry, represents a significant leap in artificial intelligence capabilities, particularly in its handling of complex queries. This innovative model is tailored to enhance reasoning capabilities, allowing it to tackle multifaceted problems with a depth of understanding that rivals the performance of PhD students in various benchmark tasks across disciplines such as mathematics, science, and coding.

The O1 model employs a chain of thought approach that enables it to evaluate multiple strategies for problem-solving before generating responses. This systematic evaluation enhances its reasoning process, leading to more accurate and context-aware interactions.

Unlike GPT-4o, the O1 model does not currently support web browsing or file uploads; however, it excels in multi-step problem-solving, making it a robust tool for users who need careful reasoning more than a broad feature set.

Additionally, the series includes a lighter version, O1-mini, designed for rapid responses specifically in STEM-related inquiries while maintaining high standards of reasoning.

Key Features and Enhancements

The defining feature of the O1 model is its chain of thought approach to query processing. The model handles complex problem-solving tasks considerably better than its predecessors and hallucinates less often.

The O1 model has achieved remarkable performance, scoring 83% on an International Mathematics Olympiad qualifying exam, underscoring its advanced reasoning capabilities in mathematics and science. Its proficiency extends to benchmark tasks in physics, chemistry, and biology, where its performance aligns with that of PhD students, showcasing its high level of reasoning ability.

Additionally, the model incorporates a new safety training approach, enhancing adherence to guidelines and achieving an impressive score of 84 out of 100 on challenging jailbreaking tests, reflecting a commitment to minimizing harmful outputs.

Designed to coexist with existing models like GPT-4o, the O1 model particularly excels in coding tasks, offering improved performance while omitting certain functionalities such as web browsing and file uploads. These enhancements position the O1 model as a powerful tool for users seeking reliable and contextually aware AI interactions.

Advanced Reasoning Capabilities

With the introduction of the O1 model, users can now experience a remarkable leap in advanced reasoning capabilities. This model employs a chain of thought approach, allowing it to dissect complex problems methodically and evaluate multiple steps before delivering responses. This enhancement greatly improves its performance in understanding intricate queries and drawing logical conclusions.

The O1 model’s advanced reasoning is evident in its benchmark results. It scored 83% on an International Mathematics Olympiad qualifying exam and reached the 89th percentile in online programming contests, and its results on physics, chemistry, and biology benchmarks are comparable to those of PhD students.

Trained with reinforcement learning and designed with a focus on reducing hallucinations and improving reliability, the O1 model aims to give users more accurate outputs when addressing complex problems in fields like science and engineering.

This sophisticated reasoning capability positions the O1 model as an invaluable tool for both casual users and professionals, enhancing the overall user experience and effectiveness in various applications.

Applications for Users

Users can leverage the advanced reasoning capabilities of the O1 model across a variety of applications that cater to both personal and professional needs.

Available at launch to ChatGPT Plus and Team users, the O1 model excels in processing complex queries, particularly in fields such as math, science, and coding. This makes it a valuable resource for students, researchers, and professionals who require accurate and context-aware responses.

The O1 model’s enhanced reasoning features facilitate deeper understanding and engagement, allowing users to tackle intricate problems with confidence.

Developers can also tap into the O1 model through OpenAI’s API, enabling integration into various applications and workflows, which enhances productivity in professional environments.

Additionally, the introduction of the O1-mini version provides an even more accessible solution for users needing quick responses to STEM-related queries, broadening the scope of its applicability.

As users start accessing the O1 model from September 12, 2024, the potential for improved outcomes in both personal projects and professional tasks is significant, setting a new benchmark for AI-assisted problem-solving.

Performance Comparison

The O1 model marks a clear advance in AI performance, particularly in mathematics and programming. Its enhanced reasoning capabilities allow it to tackle complex problems that earlier models could not solve reliably.

In a recent performance evaluation, the O1 model excelled in various assessments, achieving notable scores that highlight its superiority. Below is a comparison of the O1 model’s performance against its predecessor, GPT-4o:

| Model | Mathematics Performance | Programming Performance |
|---|---|---|
| O1 | 83% on an International Mathematics Olympiad qualifying exam | 89th percentile in online programming contests |
| GPT-4o | 13% on an International Mathematics Olympiad qualifying exam | Not specified |

The O1 model has demonstrated reasoning performance on par with PhD students in complex subjects such as physics, chemistry, and biology. While its ability to solve intricate problems is commendable, the model does exhibit slower response times compared to earlier iterations, reflecting its more deliberate thinking process. Overall, the O1 model establishes a new benchmark in AI performance and reliability.

Future Developments

Major advancements are on the horizon for the O1 model, as OpenAI prepares to release a larger version that promises to go beyond the current O1-preview. This new iteration is expected to reason more capably, allowing for more sophisticated interactions and an improved understanding of user queries.

In addition to the anticipated upgrade, OpenAI is committed to continuous improvements across both the O1 series and the GPT series. Planned features such as browsing and file uploads are expected to greatly expand the models’ functionality.

This evolution reflects OpenAI’s dedication to pushing the boundaries of AI technology, ensuring that the models remain relevant and powerful tools for users.

Moreover, user feedback will play an essential role in shaping the future of the O1 models. By incorporating real-world usage insights, OpenAI aims to create AI solutions that meet the diverse needs of its users.

As the landscape of AI continues to evolve, the new O1 model is set to redefine expectations, driving forward the conversation around advanced reasoning and user-centric design.

How to Access the O1 Model

Accessing the O1 model has been streamlined to accommodate various user groups, reflecting OpenAI’s commitment to making advanced AI capabilities widely available. As of September 12, 2024, the O1-preview model is accessible to ChatGPT Plus and Team users, marking the initial phase of its rollout. This advanced model, known for its enhanced reasoning capabilities, allows users to engage in deeper and more meaningful interactions.

In the following week, enterprise and educational users are also expected to gain access to the O1 model, broadening its reach. OpenAI plans to further extend access by introducing the O1-mini version for free users, although the specific release date remains unannounced.

Users can experiment with the O1 models via OpenAI’s API, facilitating customized applications and the testing of its advanced reasoning skills.
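As a rough illustration of what API access can look like, the sketch below builds a request for OpenAI’s Chat Completions endpoint using only the Python standard library. Several things here are assumptions rather than details from this article: the helper names `build_request` and `ask` are hypothetical, the API key is read from an `OPENAI_API_KEY` environment variable, the model identifier is written in lowercase as `o1-preview`, and your account is assumed to have access to that model.

```python
import json
import os
import urllib.request

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, model: str = "o1-preview") -> urllib.request.Request:
    """Build (but do not send) a request asking the model a single question.

    Hypothetical helper: the name and defaults are illustrative only.
    """
    payload = {
        "model": model,
        # One user message, kept deliberately minimal.
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "Content-Type": "application/json",
        # Assumption: the key is stored in the OPENAI_API_KEY environment variable.
        "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(prompt: str, model: str = "o1-preview") -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a valid key set, calling `ask("Prove that the sum of two even numbers is even.")` would send one request and return the model’s answer as a string. Because the model reasons before it responds, expect the call to take noticeably longer than a comparable GPT-4o request.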

Note that weekly message limits apply: 30 messages for O1-preview and 50 for O1-mini. These caps shape how users should budget their queries while access to the models is still being rolled out.
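For anyone who wants to budget messages against these weekly caps, a small client-side counter is one option. The sketch below is purely illustrative: `WeeklyBudget` is a hypothetical helper, the limits are copied from the figures above, and the actual enforcement happens on OpenAI’s side. The article does not say when the weekly window resets, so the reset here is manual.

```python
from dataclasses import dataclass, field

# Weekly message caps as stated in this article.
WEEKLY_LIMITS = {"o1-preview": 30, "o1-mini": 50}


@dataclass
class WeeklyBudget:
    """Hypothetical local tracker for messages sent this week."""

    used: dict = field(default_factory=lambda: {m: 0 for m in WEEKLY_LIMITS})

    def remaining(self, model: str) -> int:
        """Messages still available for the given model this week."""
        return WEEKLY_LIMITS[model] - self.used[model]

    def record(self, model: str) -> bool:
        """Count one message; return False if the cap is already reached."""
        if self.remaining(model) <= 0:
            return False
        self.used[model] += 1
        return True

    def reset(self) -> None:
        """Start a new week (call this manually when the window rolls over)."""
        self.used = {m: 0 for m in WEEKLY_LIMITS}
```

Calling `record("o1-preview")` before each message and checking its return value makes it easy to save the more limited O1-preview quota for harder problems and route quick STEM questions to O1-mini.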

Final Thoughts

The O1 model heralds a new dawn in artificial intelligence, illuminating pathways previously shrouded in complexity. With its advanced reasoning capabilities, it emerges as a formidable ally in the domains of mathematics, science, and coding. As users navigate the intricate landscape of knowledge, the O1 model stands as a lighthouse, guiding them toward clarity and understanding. The future promises even greater innovations, ensuring that the evolution of AI continues to reshape the horizons of possibility.